Many health-risk behaviors, such as overeating, smoking, drinking, substance abuse, and medication non-adherence, correlate with increased morbidity and mortality. Eliminating these behaviors can help prevent several diseases. Doing so, however, requires knowing what people put into their mouths and understanding how to change it. Current efforts to understand these behaviors are limited and rely on self-reports, which are often inaccurate and biased. Detecting these behaviors objectively and in real time, learning to predict them automatically, and intervening adaptively when problematic behaviors occur will pave the way for novel behavioral interventions.

Wearable video cameras can automatically detect these health-risk behaviors in real time, enabling personalized and adaptive interventions while providing valuable visual confirmation of the wearer’s activities to an end user (e.g., a clinical team, health coach, or dietitian). However, video recordings raise serious bystander privacy concerns, which are a leading reason people are reluctant to wear cameras in daily life. To address this concern, new methods must be developed that automatically detect health-risk behaviors and record video of only the wearer and objects close to the wearer, while de-identifying distant objects and bystanders. Infrared (IR) sensor arrays can provide an independent temperature reading at each element of the array, which can help determine whether an object is near or far. Applying machine learning algorithms to IR sensor array data enables detection of nearby objects (e.g., the wearer’s hand or an object held in it), and these algorithms can further infer what the wearer is putting in their mouth.
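To make the near/far idea concrete, here is a minimal sketch that stands in a simple temperature threshold for the machine learning step described above. It assumes a low-resolution IR frame in degrees Celsius and relies on the fact that each IR pixel reports the average temperature over its field of view: a hand close to the sensor fills whole pixels and reads near skin temperature, while a distant bystander tends to cover only part of a pixel and reads cooler. The thresholds and function names are illustrative assumptions, not HabitSense parameters.

```python
import numpy as np

# Illustrative thresholds (assumptions, not HabitSense values).
SKIN_TEMP_MIN_C = 30.0  # pixels at or above this are treated as "close and warm"
NEAR_FRACTION = 0.05    # fraction of warm pixels needed to report a nearby object


def near_object_mask(frame_c: np.ndarray, t_min: float = SKIN_TEMP_MIN_C) -> np.ndarray:
    """Boolean mask of IR pixels warm enough to suggest a nearby object such as a hand."""
    return frame_c >= t_min


def has_near_object(frame_c: np.ndarray,
                    t_min: float = SKIN_TEMP_MIN_C,
                    min_fraction: float = NEAR_FRACTION) -> bool:
    """True if enough of the frame is warm to indicate something close to the sensor."""
    return bool(near_object_mask(frame_c, t_min).mean() >= min_fraction)


# Synthetic 8x8 frame: 22 °C background with a 3x3 warm patch (e.g., a hand near the lens).
frame = np.full((8, 8), 22.0)
print(has_near_object(frame))  # False: background only
frame[2:5, 2:5] = 34.0
print(has_near_object(frame))  # True: 9 of 64 pixels are warm
```

A fixed threshold is sensitive to ambient temperature, which is one reason the text above points to learned models rather than hand-set rules.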

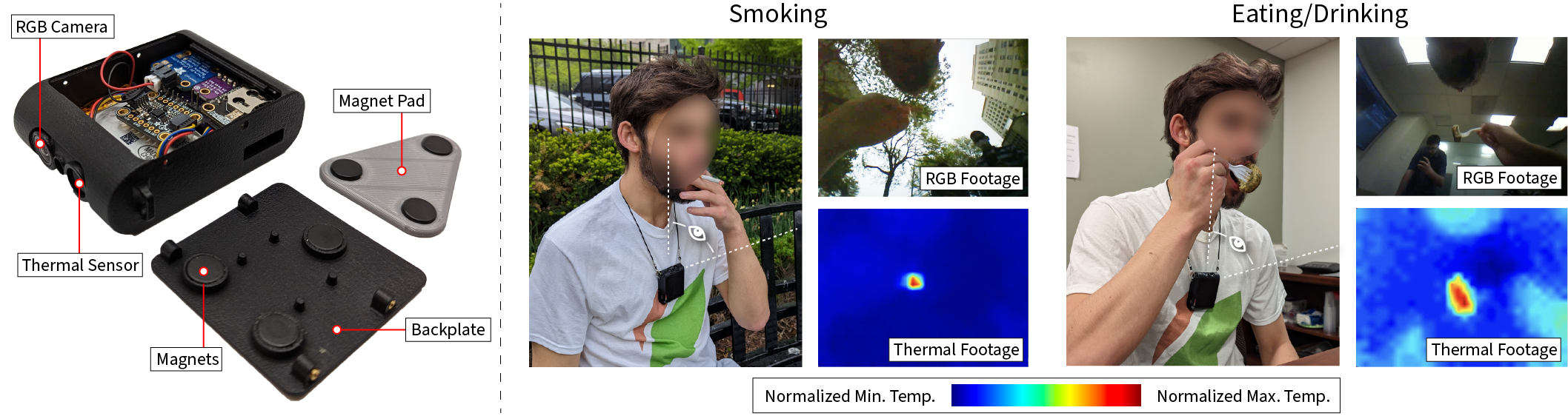

HabitSense is a neck-worn wearable platform that combines RGB, thermal, and accelerometer sensors to detect health-risk behaviors such as eating and smoking in real time. The device is designed to prioritize both user privacy and detection effectiveness, providing an innovative solution for real-world health monitoring applications.

Clinically Informed Design: HabitSense was developed in collaboration with 36 weight management and smoking cessation experts. Their insights were crucial in shaping a device that meets real-world healthcare needs, aligning it with clinical workflows and supporting adoption in healthcare settings.

User-Centric Development: Extensive feedback from 105 participants guided the design of HabitSense, resulting in a lightweight, comfortable, and unobtrusive device optimized for all-day wear. The platform effectively detects eating and smoking gestures using a combination of RGB and thermal sensors while maintaining user privacy.

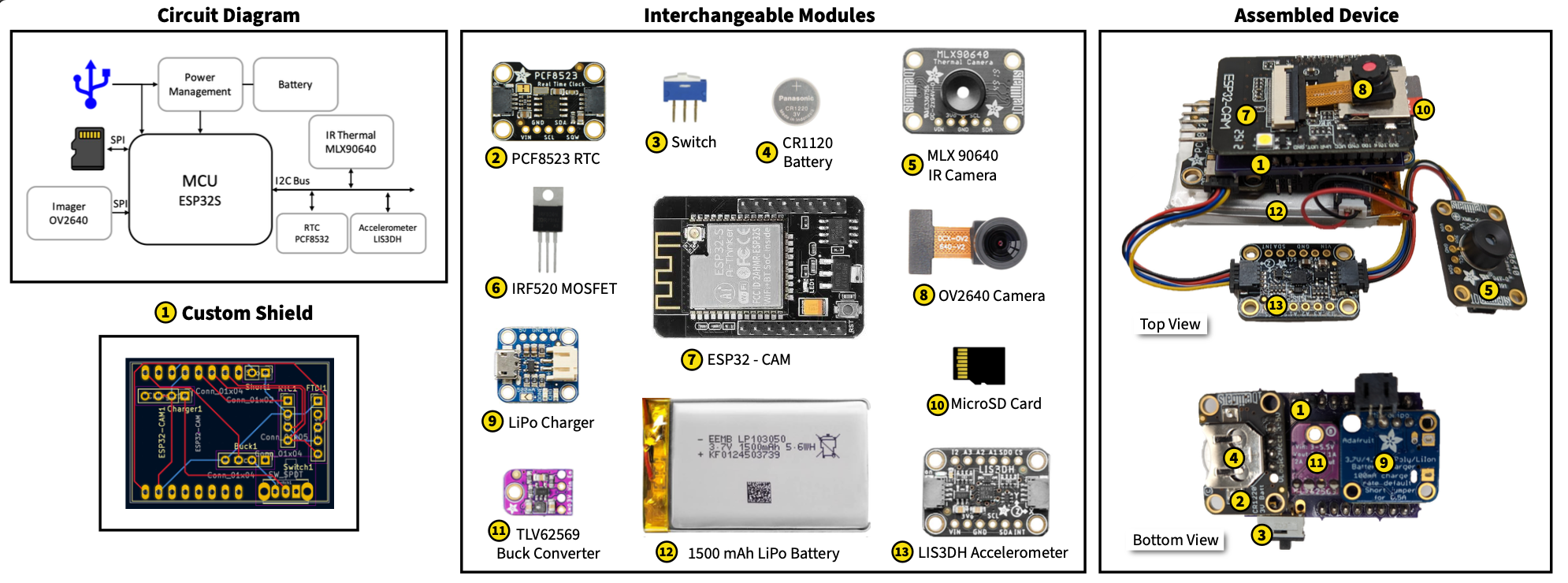

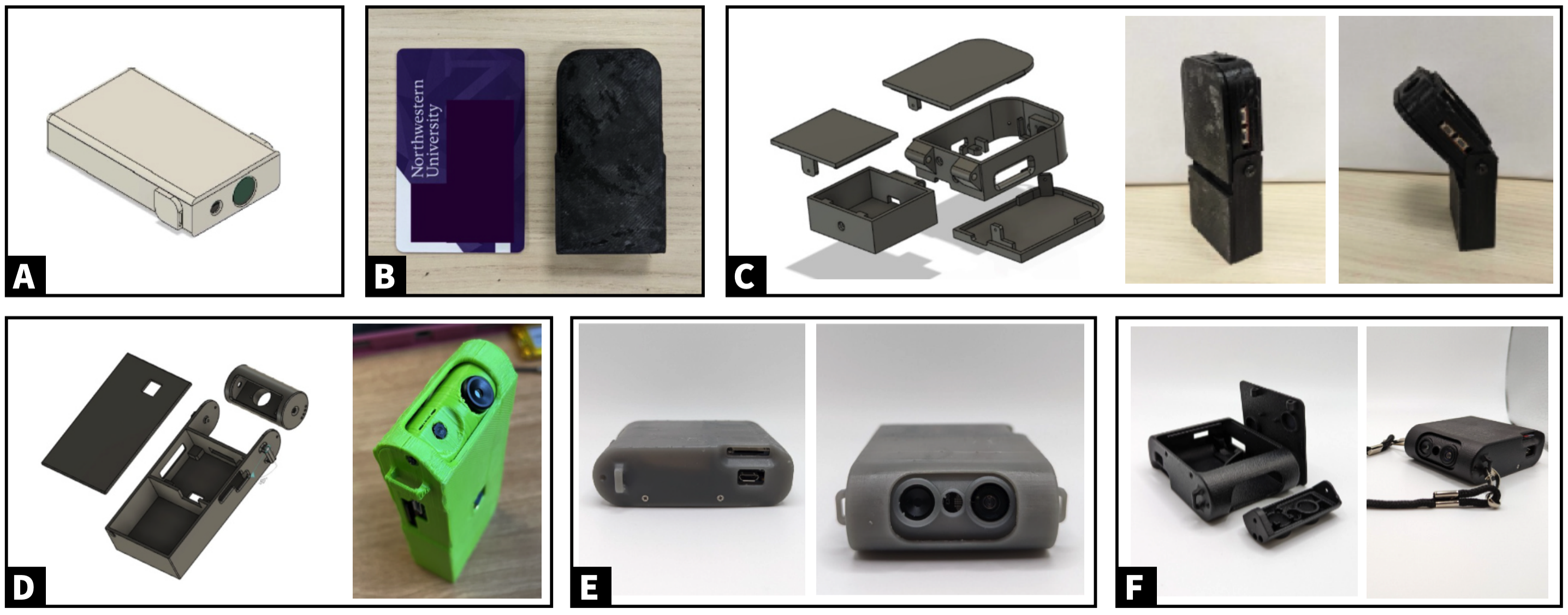

Modular and Expandable Architecture: HabitSense features a modular design that allows for easy repair, sensor upgrades, and component additions. This flexibility not only reduces costs and electronic waste but also ensures the device can be adapted for various health-monitoring applications, such as monitoring UV exposure or medication adherence.

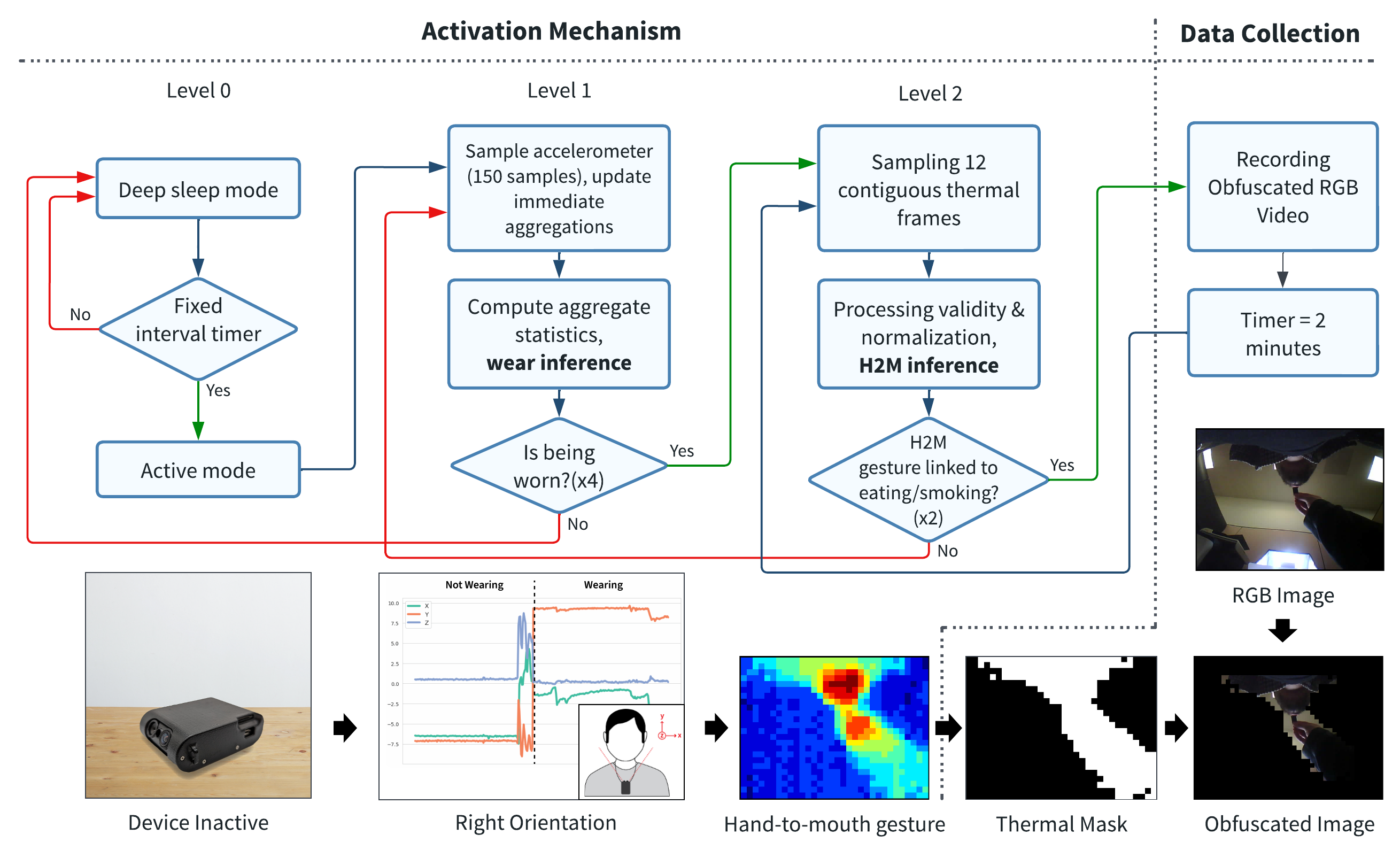

Advanced Privacy Protection: The platform employs the S.E.C.U.R.E. (Sensor-Enabled Control for Ubiquitous Recording and Evaluation) algorithm, which activates recording only during detected health-risk behaviors. This smart activation reduces data storage by 48% and extends battery life by 30%, balancing privacy with functionality.
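The published S.E.C.U.R.E. algorithm is not reproduced here; the following is only a minimal sketch of the general idea of behavior-gated recording. It assumes an upstream detector that emits one boolean per frame, plus a hypothetical `hold_frames` parameter that keeps the camera on briefly after the last detection so a gesture is not cut off partway through. `RecordingGate` and `recorded_fraction` are illustrative names, and the detector output in the example is synthetic.

```python
from dataclasses import dataclass, field


@dataclass
class RecordingGate:
    """Hypothetical gate: record only while a behavior is detected, plus a short hold-over."""
    hold_frames: int = 3                             # frames to keep recording after the last detection
    _remaining: int = field(default=0, init=False)  # hold-over frames still pending

    def update(self, behavior_detected: bool) -> bool:
        """Return True if the camera should record the current frame."""
        if behavior_detected:
            self._remaining = self.hold_frames
            return True
        if self._remaining > 0:
            self._remaining -= 1
            return True
        return False


def recorded_fraction(detections: list[bool], hold_frames: int = 3) -> float:
    """Fraction of frames that would be stored; 1 minus this is the storage saving."""
    gate = RecordingGate(hold_frames=hold_frames)
    recorded = [gate.update(d) for d in detections]
    return sum(recorded) / len(recorded) if recorded else 0.0


# Illustrative detector output for 10 frames (synthetic, not study data).
detections = [False, False, True, False, False, False, False, False, True, False]
print(recorded_fraction(detections, hold_frames=2))  # 0.5
```

The hold-over trades a little extra storage for complete gesture clips; a real system would tune it against the detector's latency.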

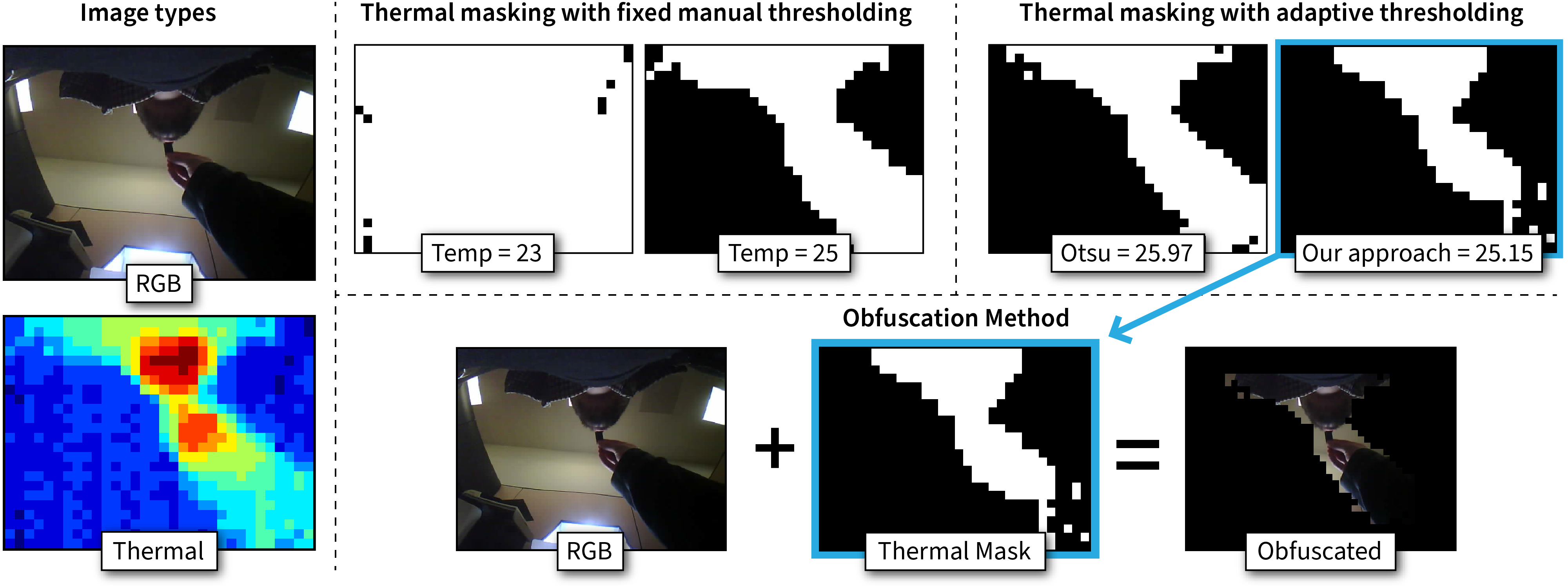

On-Device Obfuscation Algorithm: To further protect privacy, HabitSense utilizes a novel on-device obfuscation algorithm. This algorithm uses thermal data to mask background details while keeping relevant foreground activities like hand-to-mouth gestures visible, ensuring user privacy while maintaining the accuracy of behavior detection.
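A minimal sketch of thermal-guided obfuscation follows, assuming the thermal frame is spatially aligned with the RGB frame and that warm pixels correspond to the foreground (the wearer’s hand and what it holds). The low-resolution thermal mask is upsampled by nearest neighbour to the camera resolution; background pixels are replaced by coarse block averages (pixelation) while foreground pixels are kept as captured. The threshold, block size, alignment, and function names are assumptions; the actual HabitSense algorithm may differ.

```python
import numpy as np


def upsample_mask(mask_lr: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbour upsampling of a low-res boolean mask to the RGB frame size."""
    rows = np.arange(out_h) * mask_lr.shape[0] // out_h
    cols = np.arange(out_w) * mask_lr.shape[1] // out_w
    return mask_lr[rows[:, None], cols[None, :]]


def pixelate(rgb: np.ndarray, block: int) -> np.ndarray:
    """Block-average pixelation; assumes H and W are multiples of `block`."""
    h, w, c = rgb.shape
    small = rgb.reshape(h // block, block, w // block, block, c).mean(axis=(1, 3))
    return np.repeat(np.repeat(small, block, axis=0), block, axis=1).astype(rgb.dtype)


def obfuscate(rgb: np.ndarray, thermal_c: np.ndarray,
              t_min: float = 30.0, block: int = 8) -> np.ndarray:
    """Pixelate the background; keep warm (nearby) foreground pixels untouched."""
    fg = upsample_mask(thermal_c >= t_min, rgb.shape[0], rgb.shape[1])
    out = pixelate(rgb, block)
    out[fg] = rgb[fg]
    return out


# Synthetic 16x16 RGB frame and a 2x2 thermal frame where only the top-left cell is warm.
rgb = (np.arange(16 * 16 * 3) % 256).astype(np.uint8).reshape(16, 16, 3)
thermal = np.array([[34.0, 20.0], [20.0, 20.0]])
masked = obfuscate(rgb, thermal, block=4)  # top-left 8x8 kept, rest pixelated
```

Pixelation is used here only because it needs nothing beyond NumPy; a blur or solid fill would serve the same masking role.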

Energy-Efficient Operation: The device employs a multi-tiered activation strategy that leverages accelerometer data, thermal sensors, and intelligent algorithms to minimize power consumption. This approach ensures HabitSense can operate for a full day on a single charge, providing reliable and continuous monitoring.
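The multi-tiered idea can be sketched as a cascade in which each tier runs only if the cheaper tier before it fires, so the costlier sensors stay off most of the time. This sketch assumes a window of 3-axis accelerometer samples in g and a callable that reads the thermal array on demand. The tier names, thresholds, and motion feature (standard deviation of acceleration magnitude) are hypothetical, not HabitSense’s actual activation logic.

```python
from enum import Enum
from typing import Callable

import numpy as np


class Tier(Enum):
    IDLE = 0          # only the accelerometer is sampled
    THERMAL_ONLY = 1  # motion seen; thermal array read, camera stays off
    CAMERA = 2        # nearby warm object seen; RGB camera enabled


def choose_tier(accel_g: np.ndarray,
                read_thermal: Callable[[], np.ndarray],
                motion_thresh: float = 0.15,
                t_min: float = 30.0,
                min_fraction: float = 0.05) -> Tier:
    """Escalate through sensor tiers, reading costlier sensors only when needed."""
    # Tier 1 (cheapest): variability of acceleration magnitude over the window.
    motion = float(np.std(np.linalg.norm(accel_g, axis=1)))
    if motion < motion_thresh:
        return Tier.IDLE  # the thermal array is never read
    # Tier 2: read the thermal array only now, and look for a nearby warm object.
    warm_fraction = float((read_thermal() >= t_min).mean())
    if warm_fraction < min_fraction:
        return Tier.THERMAL_ONLY
    # Tier 3: something warm is close to the sensor, so turn the camera on.
    return Tier.CAMERA


still = np.tile([0.0, 0.0, 1.0], (50, 1))                      # wearer at rest
moving = np.tile([[0.0, 0.0, 1.0], [0.0, 0.0, 1.5]], (25, 1))  # wearer moving
print(choose_tier(still, lambda: np.full((8, 8), 34.0)))   # Tier.IDLE
print(choose_tier(moving, lambda: np.full((8, 8), 22.0)))  # Tier.THERMAL_ONLY
print(choose_tier(moving, lambda: np.full((8, 8), 34.0)))  # Tier.CAMERA
```

Passing the thermal read as a callable, rather than a frame, is what lets the idle path skip that sensor entirely.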

HELP Tool for Enhanced Data Annotation: The HabitSense Exploration and Labeling Platform (HELP) was developed to streamline the annotation of multimodal data collected by HabitSense. This tool improves the accuracy and efficiency of labeling behaviors, enhancing the training of AI models used for behavior detection.

AI-Powered Gesture Recognition: HabitSense incorporates state-of-the-art AI models that achieved a 92% F1-score in detecting hand-to-mouth gestures, critical for recognizing eating and smoking behaviors. The models are robust against varying conditions, such as low light and intense movement, ensuring reliable performance in diverse settings.
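For readers less familiar with the metric: F1 is the harmonic mean of precision and recall, which simplifies to 2TP / (2TP + FP + FN) in terms of true positives, false positives, and false negatives. The short helper below computes it; the counts in the example are made up to show the arithmetic and are not HabitSense results.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = 2PR / (P + R) = 2TP / (2TP + FP + FN); returns 0.0 when undefined."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Hypothetical gesture counts: 92 correct detections, 8 false alarms, 8 missed gestures.
print(f1_score(tp=92, fp=8, fn=8))  # 0.92
```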

Comprehensive Real-World Evaluation: The device was tested in real-world settings with 15 participants, capturing 768 hours of footage. The evaluation demonstrated high user acceptability and effectiveness in detecting health-risk behaviors, confirming HabitSense’s suitability for use in everyday environments.

Iterative Design Process: HabitSense was developed through multiple design iterations, from Gen 1 to the final version, incorporating feedback from users and addressing challenges related to comfort, usability, and data accuracy. This iterative process resulted in a refined device that is practical and user-friendly.

HabitSense offers a groundbreaking approach to real-time, privacy-preserving monitoring of health-risk behaviors. With its clinician-informed design, user-centric development, advanced privacy features, and robust performance, it provides a feasible and effective tool for real-world health applications. Beyond detecting eating and smoking, HabitSense has the potential to monitor other behaviors, such as medication adherence, further expanding its scope in healthcare. Join us on this journey to revolutionize health monitoring!

Bonnie Spring

Professor of Preventive Medicine

Aggelos Katsaggelos

Professor of Electrical and Computer Engineering

Josiah Hester

Associate Professor of Interactive Computing and Computer Science
