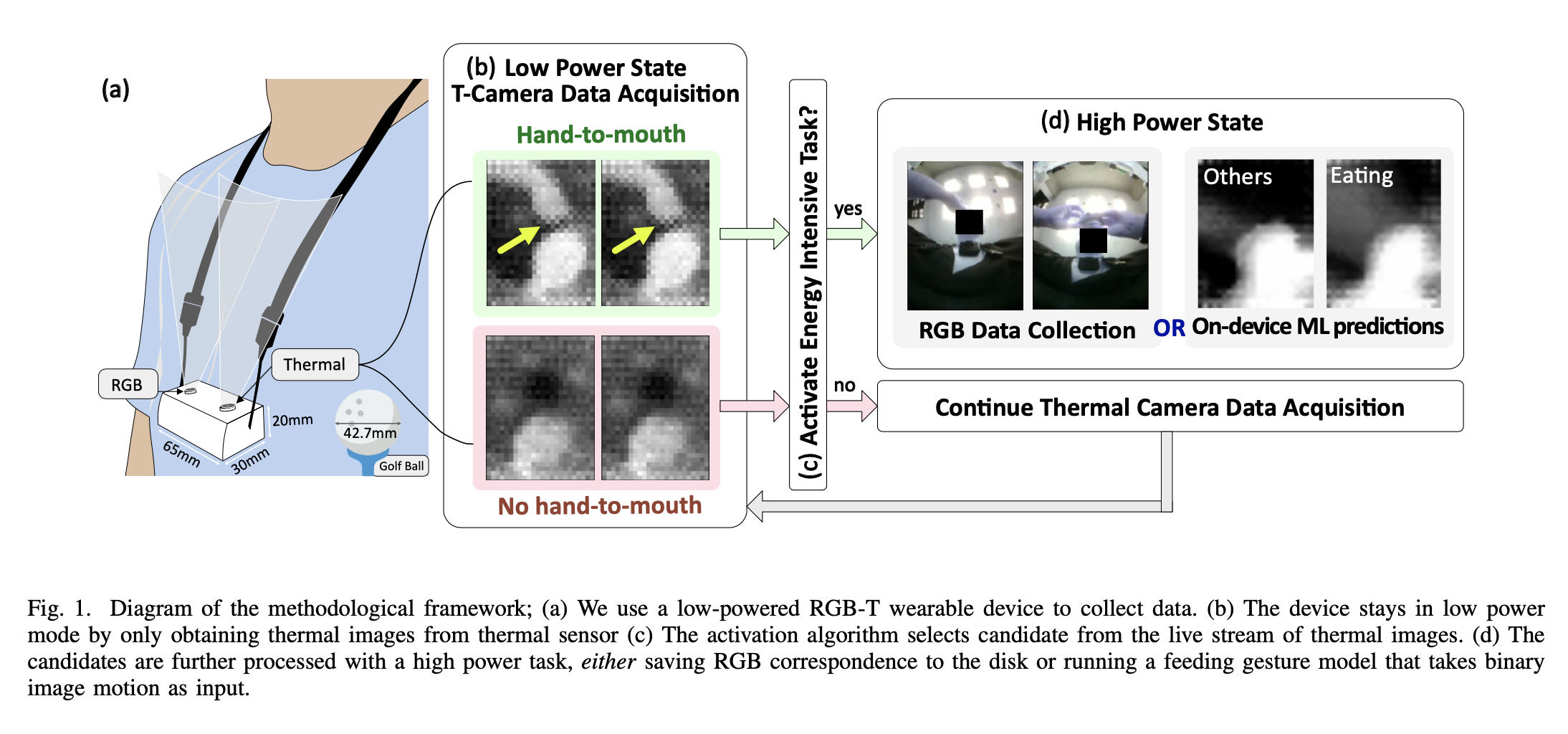

Researchers have been leveraging wearable cameras to both visually confirm and automatically detect individuals’ eating habits. However, energy-intensive tasks greatly reduce battery life. Examples include continuously collecting and storing RGB images in memory, or running algorithms in real time to automatically detect eating. Since eating moments are sparsely distributed throughout the day, this battery drain can be mitigated by recording and processing data only when there is a high likelihood of eating. We present a framework comprising a golf-ball-sized wearable device with a low-power thermal sensor array and a real-time activation algorithm. The algorithm activates high-energy tasks only when the thermal sensor array confirms a hand-to-mouth gesture. The high-energy tasks tested are turning on the RGB camera (Trigger RGB mode) and running inference with an on-device machine learning model (Trigger ML mode). Our experimental setup involved four parts: designing a wearable camera, collecting 18 hours of data with and without eating from 6 participants, implementing a feeding gesture detection algorithm on-device, and measuring the power savings of our activation method. Our activation algorithm yields an average battery lifetime increase of at least 31.5%, with a minimal drop in recall (5%). It does so without impairing the accuracy of eating detection (F1-score increases slightly, by 4.1%).
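The battery-life argument above can be made concrete with a simple average-power model. The following is a minimal sketch that assumes each component draws a constant average power. The names `PowerModel`, `runtime_hours`, and `runtime_gain` are hypothetical, and so are all wattage and capacity figures. None of them are the paper's measurements, and the sketch is not its activation algorithm. In continuous mode, the high-energy task runs all the time. In gated mode, the low-power trigger stays on, and the high-energy task runs only for the fraction of time following a detected gesture.

```python
from dataclasses import dataclass


@dataclass
class PowerModel:
    """Average power draws in milliwatts. All example values are hypothetical."""
    base_mw: float     # always-on load (e.g., microcontroller), hypothetical
    trigger_mw: float  # low-power thermal sensor array that watches for gestures
    high_mw: float     # high-energy task: RGB capture or on-device ML inference


def runtime_hours(capacity_mwh: float, model: PowerModel,
                  active_fraction: float, gated: bool) -> float:
    """Runtime = battery capacity / average power draw.

    Continuous mode runs the high-energy task all the time and needs no trigger.
    Gated mode keeps the trigger sensor on and runs the high-energy task only
    for `active_fraction` of the time (assumed: after a detected gesture).
    """
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must lie in [0, 1]")
    if gated:
        avg_mw = model.base_mw + model.trigger_mw + active_fraction * model.high_mw
    else:
        avg_mw = model.base_mw + model.high_mw
    return capacity_mwh / avg_mw


def runtime_gain(capacity_mwh: float, model: PowerModel,
                 active_fraction: float) -> float:
    """Relative runtime increase of gated over continuous operation."""
    continuous = runtime_hours(capacity_mwh, model, active_fraction, gated=False)
    gated = runtime_hours(capacity_mwh, model, active_fraction, gated=True)
    return gated / continuous - 1.0


if __name__ == "__main__":
    # Hypothetical device: 1000 mWh battery, high-energy task active 25% of the time.
    m = PowerModel(base_mw=20.0, trigger_mw=5.0, high_mw=100.0)
    print(runtime_hours(1000.0, m, 0.25, gated=False))
    print(runtime_hours(1000.0, m, 0.25, gated=True))
    print(f"{runtime_gain(1000.0, m, 0.25):.0%}")
```

Under this model, gating pays off only while the trigger's own draw is smaller than the energy it saves. That condition is `trigger_mw < (1 - active_fraction) * high_mw`. The sparser eating moments are, the smaller `active_fraction` becomes and the larger the runtime gain.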